Traceability has tremendous value for companies operating in any industry, but it’s critical for those in regulated industries. Regulatory bodies recognize its impact on product quality and safety, which is why traceability guidelines are included in several government regulations and international standards, including FDA 21 CFR Part 820, IEC 62304, and ISO 13485. For regulated industries, traceability isn’t just “nice to have”; it’s a regulatory requirement.

Despite this, many companies treat it as just another item on the auditor’s checklist. A large percentage put off traceability tasks until the end of the project, and then go through the tedious, manual work of connecting all of the product artifacts and assembling a traceability matrix. They know they may have missed a few links (or more), so come audit time, they cross their fingers and hope for the best. If they’re “lucky,” the auditor picks only artifacts that are traced, but then they end up crossing their fingers again, hoping no undiscovered hazards are hiding in the final product. If they’re not lucky, they face warning letters, delays, and potentially very steep fines.

Building the trace matrix at the end of the development process defeats the whole goal of traceability: ensuring product quality and safety by effectively managing change. What’s more, putting it off could be costing significant amounts of time and money. If these companies implemented integrated traceability, however, they could improve product quality, cut costs, and spend far less time and effort assembling the reports auditors require.

What Is Integrated Traceability?

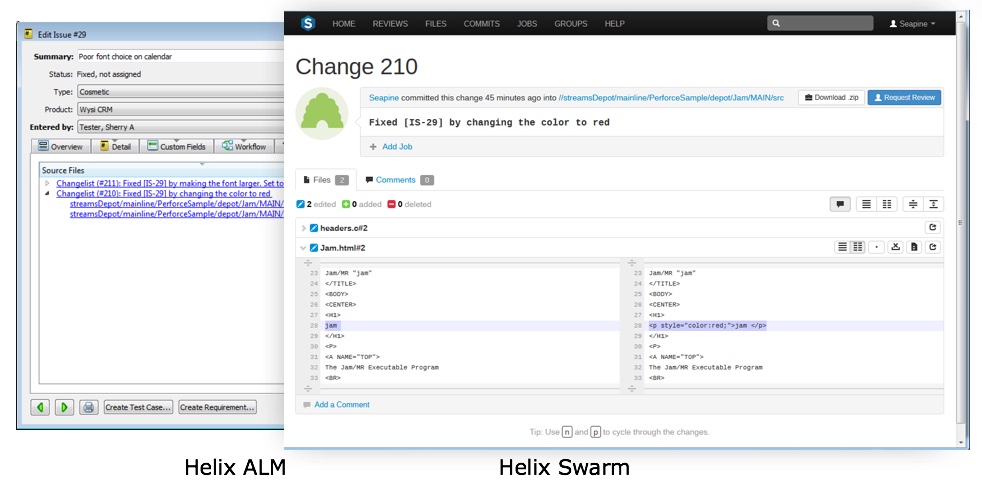

Integrated traceability is the ability to obtain up-to-the-minute status information on every aspect of the product development lifecycle. It links all of the artifacts that contribute to the development of your product, which makes it easy to analyze data, generate traceability reports, and keep a close eye on the project’s status. To be effective, integrated traceability requires a good traceability strategy, along with a software tool like TestTrack to provide a live stream of information.

Benefits

Because integrated traceability begins when the project begins, it offers a host of benefits throughout the development process — from design reviews to risk analysis, gap analysis to verification and validation.

Design Review

Integrated traceability aids in design reviews by making it easy to understand requirements decomposition through linking. For example, marketing requirements link to product requirements, which link to system specifications.
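As a rough illustration of requirements decomposition through linking, the sketch below models trace links as a plain Python dictionary and walks them from a marketing requirement down to its system specifications. The artifact IDs (MR-1, PR-1, SS-1, and so on) and the `decompose` helper are hypothetical and are not taken from any particular tool.

```python
# Hypothetical trace links: each artifact ID maps to the artifacts derived from it.
LINKS = {
    "MR-1": ["PR-1", "PR-2"],   # marketing requirement -> product requirements
    "PR-1": ["SS-1"],           # product requirement -> system specification
    "PR-2": ["SS-2", "SS-3"],
}


def decompose(artifact_id, links=LINKS):
    """Return every artifact downstream of artifact_id, in depth-first order."""
    result = []
    for child in links.get(artifact_id, []):
        result.append(child)
        result.extend(decompose(child, links))
    return result


# Everything a reviewer would need to look at when MR-1 is under review.
print(decompose("MR-1"))
```

A reviewer could use a walk like this to see, at a glance, which product requirements and specifications are affected when a marketing requirement changes.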

Risk Analysis

By the same token, integrated traceability can help identify risk-based and safety-based requirements by including risk and hazard analysis within the traceability implementation. Development teams can also identify the code that implements each design, technical, or software specification, making it much easier to find high-risk code and to see where each requirement is implemented.

Gap Analysis

Traceability also helps you identify gaps in documentation, testing, and risk analysis. Seeing these gaps lets teams fill them before an auditor comes knocking at the door. With this information at their fingertips, teams also have an easy way to provide evidence for any audits that pop up unexpectedly. This was particularly helpful for a Seapine client who arrived at his office to find he was having a surprise audit that day. Because he was confident in the company’s implementation of integrated traceability, he was able to deliver information to the auditor quickly, which in turn shortened the audit. He said it was the fastest he had ever had an auditor in and out of the building.
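To make the idea of a gap concrete, here is a minimal sketch, assuming trace links are stored as simple mappings from requirement IDs to linked artifacts. The IDs, the two link tables, and the `find_gaps` helper are all hypothetical; a real tool would query its own database instead.

```python
# Hypothetical requirements and the links recorded against them.
requirements = ["REQ-1", "REQ-2", "REQ-3"]
test_links = {"REQ-1": ["TC-1", "TC-2"], "REQ-3": ["TC-3"]}  # requirement -> test cases
risk_links = {"REQ-1": ["HAZ-1"], "REQ-2": ["HAZ-2"]}        # requirement -> analyzed hazards


def find_gaps(reqs, links):
    """Return the requirements that have no linked artifact of the given kind."""
    return [r for r in reqs if not links.get(r)]


untested = find_gaps(requirements, test_links)
unanalyzed = find_gaps(requirements, risk_links)
print("No test coverage:", untested)
print("No risk analysis:", unanalyzed)
```

Running a check like this throughout the project, rather than just before an audit, is what allows gaps to be closed while they are still cheap to fix.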

Verification and Validation

Finally, integrated traceability helps to ensure that requirements are implemented correctly from both a verification and a validation point of view. Teams can quickly confirm that each requirement has been implemented correctly and that the product functions for the user as intended.

Solve Problems Early

As the FDA’s General Principles of Software Validation guidance notes, the vast majority of software problems can be traced to errors made during the design and development process. Integrated traceability gives development teams the power to spot problems early, so they can correct them while the cost and effort of doing so are low. And as for the tedious process of assembling the trace matrix? Because teams maintain traceability throughout the process, they can generate a matrix on demand, at any point. In TestTrack, it takes just a couple of clicks.
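When links are maintained continuously, producing a trace matrix becomes a simple lookup rather than a manual assembly job. The sketch below is a generic illustration of that idea and does not show how TestTrack works internally; the `trace_matrix` and `render` helpers and all artifact IDs are hypothetical.

```python
def trace_matrix(requirements, tests, links):
    """Build a requirement-by-test matrix; 'X' marks a recorded link."""
    rows = [["Requirement"] + tests]
    for req in requirements:
        linked = links.get(req, [])
        rows.append([req] + ["X" if t in linked else "" for t in tests])
    return rows


def render(rows):
    """Format the matrix as aligned, pipe-separated text."""
    width = max(len(cell) for row in rows for cell in row)
    return "\n".join(" | ".join(cell.ljust(width) for cell in row) for row in rows)


# Hypothetical project data: REQ-2 has no linked test yet.
matrix = trace_matrix(
    ["REQ-1", "REQ-2"],
    ["TC-1", "TC-2"],
    {"REQ-1": ["TC-1"]},
)
print(render(matrix))
```

An empty row, such as the one for REQ-2 here, is exactly the kind of gap an auditor would ask about.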

Learn More

An integrated traceability solution can help companies bring quality products to market more quickly, safely, and profitably. To learn how, download our white paper, 5 Ways to Bring Quality Software Products to Market Faster.